Another Buzzword?

The term “Quantum Computing” has been one of the most popular buzzwords of 2018. And rightly so: researchers have already proposed applications for it in almost every field out there, from Physics to Medicinal Sciences, even AI. Some day everybody may be able to use “quantum computing” in some way or the other, just as we use computers (referred to as “classical” computers from now on) today. It has the potential to disrupt almost every industry out there.

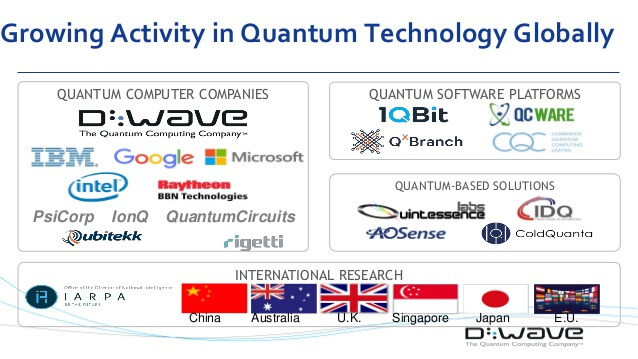

Some of the world’s most influential companies, like Google, Microsoft, IBM and Intel, as well as governments around the world, are making significant investments, and more and more people are gearing up to be the first to get their hands on a quantum computer.

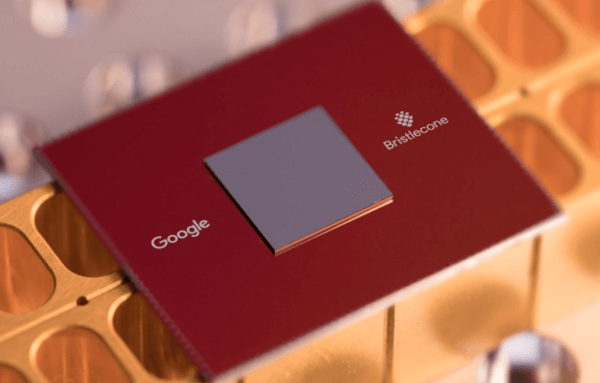

Image: Google’s Bristlecone Processor

Quantum computers already exist today, but the race is on to build a commercially viable one. And it is certainly very difficult to avoid all the hype.

Get Started

Some recent developments in quantum computing technology led to a few news articles showing up in my news feed, and they reminded me of a cool video on quantum computing that I saw on YouTube a couple of years back - here it is. When I first saw it, I was in awe and intrigued.

Quantum computing is, simply put, computing that uses quantum-mechanical phenomena such as superposition and entanglement. Speaking of quantum mechanics, Richard Feynman, one of the greatest physicists and educators and one of the founding fathers of quantum computing, once said - “Nobody understands quantum mechanics”. Here is a video. Perhaps he meant that you don’t need to fully understand it to be able to tinker with the ideas and possibilities.

Quantum mechanics has been around for about a century, and yet it is still a very active field where people are proposing new ideas, revealing new things and solving problems. We do not know whether it is the ultimate correct model of the universe and its atoms, but we do know that this is how nature actually behaves, not the way we previously thought. And that is what pushes us out of our comfort zone as we go deep into the world of quantum mechanics.

“Nature isn’t classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical, and by golly it’s a wonderful problem, because it doesn’t look so easy.” - Richard P. Feynman

The Limits of Classical Computers

As you know, computers have grown exponentially in computing power over the past decades, but Moore’s law is widely expected to end soon. So how are we going to meet the ever-increasing demand for computing power from Artificial Intelligence, simulations, and ever more complex problems in cryptography?

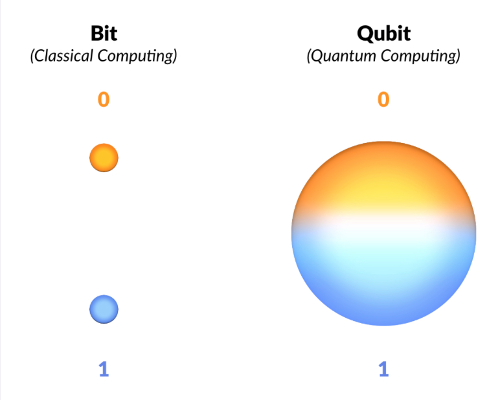

In our day-to-day life, we use devices based on the binary number system, simply because it is the easiest to realize physically. Only two states are possible: ON means 1 and OFF means 0. All information is represented as a sequence of these “bits”, each of which can only be in state 1 or 0 at any given instant. We manipulate these sequences using logic gates, built from transistors, to perform calculations like addition and multiplication.

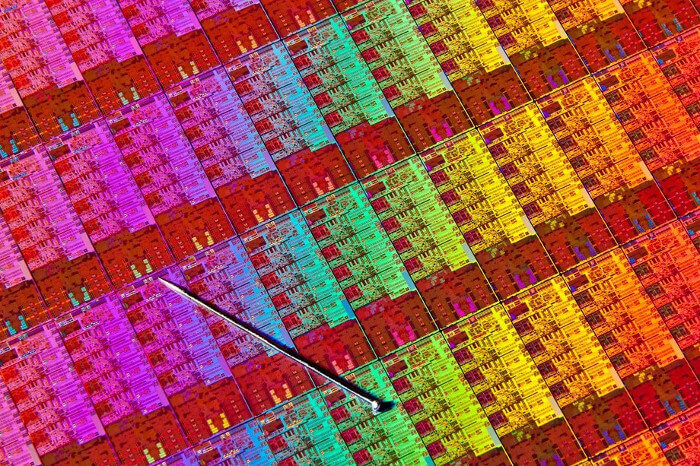
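To make “calculations with logic gates” concrete, here is a minimal sketch of a ripple-carry adder: each `full_adder` combines XOR, AND and OR gates to add three bits, and `add_bits` chains them to add two binary numbers bit by bit. Both function names are illustrative, and real CPUs use faster adder designs, so treat this as a simplified model of the idea rather than how any particular chip works.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits using only logic gates; return (sum_bit, carry_out)."""
    partial = a ^ b                              # XOR gate
    sum_bit = partial ^ carry_in                 # XOR gate
    carry_out = (a & b) | (partial & carry_in)   # two AND gates feeding an OR gate
    return sum_bit, carry_out


def add_bits(x: str, y: str) -> str:
    """Ripple-carry addition of two equal-length bit strings such as '0101'."""
    result = []
    carry = 0
    # Walk from the least significant (rightmost) bit to the most significant.
    for a, b in zip(reversed(x), reversed(y)):
        s, carry = full_adder(int(a), int(b), carry)
        result.append(str(s))
    if carry:
        result.append("1")  # a final carry adds one extra leading bit
    return "".join(reversed(result))


print(add_bits("0101", "0011"))  # 5 + 3, prints 1000 (binary 8)
```

The carry “ripples” from one full adder to the next, which is exactly why this design is simple but slow for wide numbers.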

Transistors keep getting smaller, and their size has reached 14nm today; even the older 22nm Haswell generation of the Intel Core i7 packs about 1.4 billion of them onto a single chip. At such a small scale, a strange phenomenon called “Quantum Tunnelling” appears: electrons can pass straight through a barrier that should stop them and show up on the other side of the transistor, even when it is switched off. At that point we can no longer reliably control their flow. The classical physics that describes how computers work breaks down at this scale, and we need to get our heads around quantum mechanics if we want computers that are even smaller and more powerful.

Image: Intel 22nm Haswell Die
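How quickly does tunnelling become a problem as things shrink? A common textbook approximation for an electron meeting a rectangular barrier of width d that sits V - E above the electron’s energy is T ≈ exp(-2κd), with κ = √(2m(V - E))/ħ. The sketch below assumes an illustrative barrier height of 1 eV (an assumption, not a real transistor parameter); real gate structures are more complicated, so the numbers only show the trend: with these values, thinning the barrier from 2 nm to 1 nm raises the tunnelling probability by roughly four orders of magnitude.

```python
import math

# Physical constants (SI units)
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M_E = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19     # one electron-volt, J


def tunneling_probability(barrier_ev: float, width_nm: float) -> float:
    """Approximate probability that an electron tunnels through a rectangular
    barrier `barrier_ev` electron-volts above its energy and `width_nm` wide.
    Uses the thick-barrier approximation T ~ exp(-2 * kappa * d)."""
    kappa = math.sqrt(2 * M_E * barrier_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * width_nm * 1e-9)


# Illustrative 1 eV barrier (assumed), for shrinking barrier widths.
for width in (5.0, 3.0, 2.0, 1.0):
    print(f"{width:.0f} nm barrier: T ~ {tunneling_probability(1.0, width):.1e}")
```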

We need quantum computing to be able to push the envelope or else we will soon reach the apogee.

Quantum Computing

In quantum computing, you have something called a “qubit”. Like a bit, it has a value that can be 0 or 1. So what is so different here? A qubit takes a definite value of 0 or 1 only when we measure (see) it. When we are not looking, it can be in a state that blends 0 and 1, where each value has a certain probability of showing up once we do measure it. This is called Superposition.
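A qubit’s state can be written as two complex numbers (amplitudes), one for 0 and one for 1, and the probability of seeing each outcome is the squared magnitude of its amplitude (the Born rule). Here is a minimal pure-Python simulation; the amplitudes are an arbitrary example, and `measure` is a hypothetical helper, not a function from any quantum SDK.

```python
import math
import random

# A qubit state a|0> + b|1>, stored as two complex amplitudes.
# Illustrative amplitudes: 0 with probability 0.25, 1 with probability 0.75.
amp0 = complex(0.5, 0)
amp1 = complex(math.sqrt(3) / 2, 0)

# Born rule: probability of each measurement outcome = |amplitude|^2.
p0 = abs(amp0) ** 2
p1 = abs(amp1) ** 2
assert math.isclose(p0 + p1, 1.0)  # a valid qubit state is normalized


def measure() -> int:
    """Simulate one measurement of a freshly prepared copy of the qubit."""
    return 0 if random.random() < p0 else 1


random.seed(0)
shots = [measure() for _ in range(10_000)]
print(f"P(0) = {p0:.2f}, observed frequency of 0 = {shots.count(0) / len(shots):.3f}")
```

Each single shot gives a plain 0 or 1; the probabilities only show up in the statistics over many freshly prepared qubits.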

If you have heard of “Schrödinger’s Cat”, then you know what I am talking about; if you haven’t, I highly recommend watching this.

As explained above, a qubit can be a combination of both 0 and 1 at the same time. The moment we measure it to determine its value, it collapses to only one state: either 0 or 1.
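Collapse can be modelled in the same toy fashion: a measurement returns one outcome and replaces the superposition with the matching definite state, so measuring again keeps giving the same answer. This is a simplified sketch; representing the state as a tuple of amplitudes and the `measure` helper are assumptions for illustration.

```python
import math
import random


def measure(state):
    """Measure a single-qubit state (amp0, amp1).
    Returns (outcome, post_measurement_state): the superposition collapses
    to |0> = (1, 0) or |1> = (0, 1)."""
    amp0, amp1 = state
    p0 = abs(amp0) ** 2 / (abs(amp0) ** 2 + abs(amp1) ** 2)
    if random.random() < p0:
        return 0, (1 + 0j, 0j)
    return 1, (0j, 1 + 0j)


# Equal superposition: 50% chance of 0, 50% chance of 1.
plus = (1 / math.sqrt(2) + 0j, 1 / math.sqrt(2) + 0j)

random.seed(1)
first, collapsed = measure(plus)
# Measuring the collapsed state again always repeats the first result.
repeats = [measure(collapsed)[0] for _ in range(5)]
print(first, repeats)
```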

Because qubits can exist in a superposition of both states, a quantum computer can work on many possibilities together, which for certain problems allows a kind of parallelism on a scale that a classical computer cannot match.

4 classical bits = 16 possible states, but only 1 state at a given time:

0000 0001 0010 0011

0100 0101 0110 0111

1000 1001 1010 1011

1100 1101 1110 1111

4 qubits = all 16 states at a given time. With only 20 qubits we can hold a superposition of 2^20 = 1,048,576 states at the same time! Measuring the register still returns just one of them, but clever algorithms can exploit the superposition to search databases much faster, do certain complex numerical calculations far more quickly, and decipher codes that are considered uncrackable with the computers we have today.
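Describing n qubits takes one amplitude for each of the 2^n bit strings. Applying a Hadamard gate to each of n qubits that start at 0 gives every bit string the same amplitude, 1/√(2^n). The sketch below (the `uniform_superposition` helper is hypothetical) builds that state classically, which also shows why simulating many qubits on a classical computer gets expensive so fast: the dictionary doubles in size with every added qubit.

```python
import math
from itertools import product


def uniform_superposition(n: int) -> dict[str, float]:
    """State after applying a Hadamard gate to each of n qubits starting in |00...0>.
    Every one of the 2**n basis states gets the same amplitude 1/sqrt(2**n)."""
    amp = 1 / math.sqrt(2 ** n)
    return {"".join(bits): amp for bits in product("01", repeat=n)}


state = uniform_superposition(4)
print(len(state))          # 16 basis states, all in one superposition
print(sorted(state)[:4])   # ['0000', '0001', '0010', '0011']
print(2 ** 20)             # 1048576 amplitudes needed for 20 qubits
```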

Applications

For some problems that are hard for classical computers, quantum computing can make a dramatic difference.

Machine Learning and AI - Quantum computing could accelerate some machine learning algorithms and data analysis.

Cryptography - One of the fields that will be most radically transformed by the advent of quantum computing. Large quantum computers could break widely used public-key schemes such as RSA, while quantum techniques can also make data much more secure; one such example is quantum key distribution, which protects encryption keys as they are exchanged.
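Quantum key distribution is a protocol rather than a program, but its key-sifting step is easy to simulate. The sketch below follows BB84 (Bennett and Brassard, 1984), one well-known QKD scheme: Alice encodes random bits in randomly chosen bases, Bob measures each one in his own random basis, and they publicly compare bases (never the bits) and keep only the positions where the bases matched. This is a noise-free toy with no eavesdropper, error estimation or privacy amplification, and `bb84_sift` is a hypothetical name.

```python
import random


def bb84_sift(n: int, seed: int = 42):
    """Toy, noise-free, eavesdropper-free simulation of BB84 key sifting.
    '+' stands for the rectilinear basis and 'x' for the diagonal basis."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n)]
    alice_bases = [rng.choice("+x") for _ in range(n)]
    bob_bases = [rng.choice("+x") for _ in range(n)]

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        if a_basis == b_basis:
            bob_bits.append(bit)                # same basis: Bob reads Alice's bit exactly
        else:
            bob_bits.append(rng.randint(0, 1))  # wrong basis: outcome is a coin flip

    # Over a public channel they compare bases and keep matching positions only.
    keep = [i for i in range(n) if alice_bases[i] == bob_bases[i]]
    alice_key = [alice_bits[i] for i in keep]
    bob_key = [bob_bits[i] for i in keep]
    return alice_key, bob_key


alice_key, bob_key = bb84_sift(32)
print(len(alice_key), alice_key == bob_key)
```

On average about half the positions survive sifting. In the real protocol, an eavesdropper measuring in the wrong basis disturbs the photons, which shows up as errors when Alice and Bob compare a sample of their key.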

Medicine and Materials - Chemists need to evaluate the interactions between molecules, proteins, and chemicals to see if medicines will improve certain conditions or cure diseases, but because of the extraordinary number of combinations that must be analyzed, this is time- and labor-intensive. Since quantum computers could evaluate many molecules, proteins and chemicals together, they could help chemists identify viable drug options faster.

Weather Forecasting and Climate Change Predictions - Faster weather data analysis and modelling could help meteorologists know much better when bad weather will strike, so they can alert people and ultimately save lives.

Big Data - Searching big data is a problem we are destined to face, and it only gets harder as the data grows. For searching an unsorted database, a quantum computer needs significantly fewer steps than a classical computer: roughly √N queries instead of up to N.
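Grover’s algorithm is the standard example of quantum database search. Below is a small classical simulation of it (the `grover_search` helper is hypothetical): the oracle flips the sign of the marked item’s amplitude, and the diffusion step reflects every amplitude about the mean. After about (π/4)√N rounds the marked item is measured with high probability; for N = 1024 that is 25 rounds, compared with up to 1,024 checks for a classical scan. Note that the speedup is quadratic, not exponential.

```python
import math


def grover_search(n_qubits: int, marked: int) -> tuple[int, float]:
    """Classically simulate Grover's algorithm over N = 2**n_qubits items.
    Returns (iterations used, probability of measuring the marked item)."""
    N = 2 ** n_qubits
    amps = [1 / math.sqrt(N)] * N                         # start in the uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(N))   # about (pi/4) * sqrt(N) rounds
    for _ in range(iterations):
        amps[marked] = -amps[marked]          # oracle: flip the sign of the marked item
        mean = sum(amps) / N
        amps = [2 * mean - a for a in amps]   # diffusion: reflect every amplitude about the mean
    return iterations, amps[marked] ** 2


its, p = grover_search(10, marked=123)
print(f"N = 1024: {its} Grover iterations, P(marked) = {p:.4f}")
```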

Some other fields of application, with example problems, include:

Aerospace Engineering: Optimal mesh partitioning for finite elements

Biology: Phylogeny reconstruction

Chemical Engineering: Heat exchanger network synthesis

Chemistry: Protein folding

Civil Engineering: Equilibrium of urban traffic flow

Economics: Computation of arbitrage in financial markets with friction

Electrical Engineering: VLSI layout

Environmental Engineering: Optimal placement of contaminant sensors

Financial Engineering: Minimum risk portfolio of given return

Game Theory: Nash equilibrium that maximizes social welfare

Mechanical Engineering: Structure of turbulence in sheared flows

Medicine: Reconstructing 3D shape from biplane angiocardiogram

Operations Research: Traveling salesperson problem

Physics: Partition function of the 3D Ising model

Politics: Shapley-Shubik voting power

Recreation: Versions of Sudoku, Checkers, Minesweeper, and Tetris

Statistics: Optimal experimental design

If you want to learn about more applications of quantum computing, I highly recommend this.

Conclusion

Quantum computing is at a stage comparable to the early days of the computers we use today. In March 2018 Google unveiled Bristlecone, a new quantum computing chip with 72 qubits, and in August 2018 Rigetti announced plans for a 128-qubit system, which would be the largest number of qubits on a single chip.

If you’ve ever thought that everything has already been done and there is not much left for you to explore, quantum mechanics and quantum computing are something you must look into.

And if you’re ready, then take the leap and start learning now.

References and Further Reading

- The Coming Quantum Leap in Computing

- Qubit Counter

- Google and NASA’s Quantum Artificial Intelligence Lab

- Quantum Computing Is Going to Change the World. Here’s What This Means for You.

- 6 Practical Examples Of How Quantum Computing Will Change Our World

- Quantum Computers Explained – Limits of Human Technology *

- D-WAVE Quantum Computing - Applications *

(*) already mentioned inline